The Growing Importance of Wikipedia (or, 'AI Search doesn't think you exist')

Bad news down under - the next generation of search engines doesn’t think the NRA of Australia exists.

Last year I wrote about my contributions to Wikipedia, and about how making sure publicly available information is accurate and comprehensive affects search results and - potentially - public perceptions of shooting sports.

With the recent developments in “AI” Large Language Models (namely ChatGPT), this has taken on a new urgency.

In that article I noted that Wikipedia and sister projects like Wikidata are leaned on heavily by search engines and other algorithmic systems, and that the shortage of reliable published information about shooting sports (and National Rifle Associations that aren’t the NRA of America) was biasing output. Algorithms can only parse what exists. If you don’t exist on the internet, then you don’t exist in Google. This is a Knowledge Reporting Bias, which is basically a form of Sampling Bias.

If someone comes along and asks Google about shooting sports in the UK, then Google is going to go looking for answers. If it can’t find any, then it will latch onto the next plausibly relevant thing. That might be the NRA of America; it might be an article about a mass shooting. It is important to remember that computers are stupid, and will do exactly what they’re asked to do, even if it wasn’t what you meant to ask them. In trying to be helpful, they will cheerfully dredge up irrelevant results, misleading articles, or even conspiracy theories which somehow seem related to your topic of interest.
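To make the "latch onto the next plausibly relevant thing" problem concrete, here is a minimal sketch in Python. Everything in it is an assumption for illustration: the three-document index, the titles and the naive word-overlap scoring are all made up, and real search engines are vastly more sophisticated. The point is only that a ranker returns the best match it has, however poor, and that the answer changes as soon as the missing document exists.

```python
# Toy illustration only: a hypothetical index and a naive word-overlap score.
# This is not how Google or Bing actually rank results.

def score(query, text):
    """Count how many distinct query words appear in the document text."""
    return len(set(query.lower().split()) & set(text.lower().split()))

def search(query, index):
    """Return the best-scoring title, however weak that best match is."""
    return max(index, key=lambda title: score(query, index[title]))

# A sparse index: nothing in it is about the UK at all.
index = {
    "National Rifle Association of America":
        "national rifle association of america rifle shooting lobby",
    "News: mass shooting": "mass shooting news report",
    "History of cricket": "cricket sport history",
}

before = search("rifle shooting uk", index)

# Now somebody writes the missing article.
index["National Rifle Association (UK)"] = (
    "national rifle association uk rifle shooting bisley"
)
after = search("rifle shooting uk", index)

print(before)  # National Rifle Association of America
print(after)   # National Rifle Association (UK)
```

The search code never changed between the two queries. Only the data did, which is the whole argument for filling the gaps.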

Enter the Machine

Late last year, OpenAI released ChatGPT. ChatGPT is a Large Language Model (despite the branding, it’s not really an AI - it’s not actually intelligent and doesn’t understand what it’s writing), and will cheerfully generate plausible - even convincing - text with limited regard to accuracy. Computer scientist Alexander Hanff has outright called for ChatGPT to be destroyed after it repeatedly told him he was dead, and then provided URLs to his obituaries - URLs which didn’t exist, because ChatGPT had hallucinated them. Make no mistake, ChatGPT is not merely a “natural language” chat layer on top of an index of verifiable facts. As a large language model, it simply puts one plausible word after another, guided by patterns in the text it was trained on rather than by a lookup in any verified knowledge store. If the next plausible thing should be a URL to an obituary, then that’s what it will provide, whether or not such a page exists. If it does, then great. If not, it’ll hallucinate one to support its fallacious argument. Think of it like Boris Johnson.
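For the curious, the "one plausible word after another" idea can be sketched with a toy next-word model. This is emphatically not how ChatGPT is built (that is a very large neural network, not a lookup table of word pairs), and the three training sentences and the name "alice" are invented for the example. It does show the failure mode in miniature: every individual step is plausible, yet the finished sentence is a claim that appears nowhere in the training text.

```python
from collections import Counter, defaultdict

# Hypothetical training text. Note that no sentence says alice has died.
CORPUS = [
    "alice is a computer scientist who writes about privacy",
    "the scientist who died last year",
    "the poet who died last year",
]

def train(sentences):
    """For each word, count which words follow it in the training text."""
    follows = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for current, nxt in zip(words, words[1:]):
            follows[current][nxt] += 1
    return follows

def generate(follows, start, max_words=12):
    """Repeatedly append the most common next word until none is known."""
    words = [start]
    while len(words) < max_words and words[-1] in follows:
        next_word = follows[words[-1]].most_common(1)[0][0]
        words.append(next_word)
    return " ".join(words)

model = train(CORPUS)
generated = generate(model, "alice")
print(generated)  # alice is a computer scientist who died last year
```

The model has no notion of whether alice is alive. After "who", the word "died" simply turns up more often than "writes", so that is what comes out.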

Does this matter? Does it matter if a novelty like ChatGPT is a bit flaky? Well it wouldn’t, except Bing is now building a chat AI helper into its search, and DuckDuckGo have just announced DuckAssist. And if you think “yeah, but who uses Bing?” then Google are hot on their heels with “Bard”, but it’s not widely available to play with yet. Bing’s helper is built on OpenAI’s models (the same family that ChatGPT is built on), and DuckAssist uses language technology from OpenAI and Anthropic. Bard runs on Google’s own LaMDA model rather than anything from OpenAI, but it is the same kind of technology with the same basic weaknesses. (Google are quick to state that Bard isn’t supposed to be used for search - “it’s a language model, not a knowledge model” - but they also accept people are probably going to try to use it for search, and generative dialogue will involve answering questions.)

How does AI Search fare?

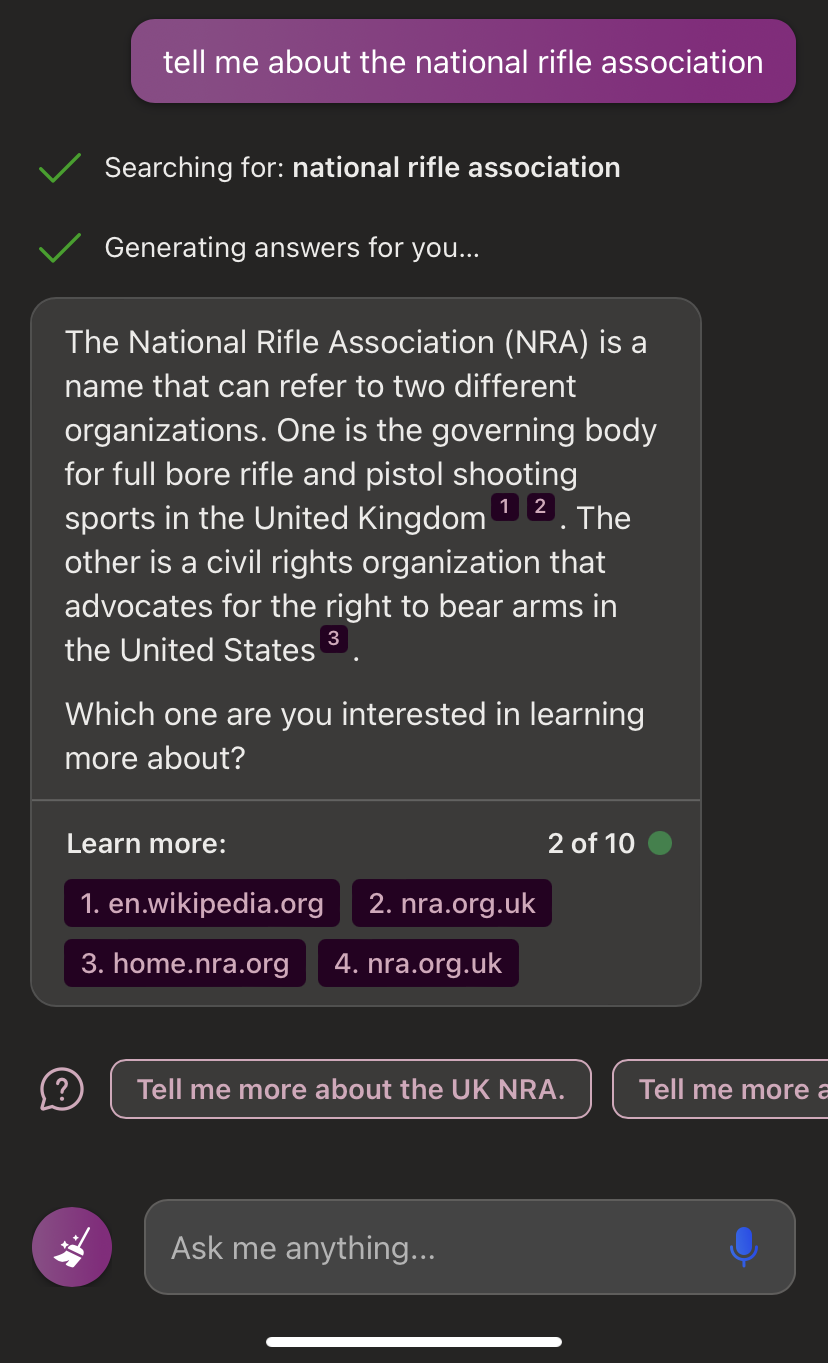

I asked Bing’s Chat AI to “tell me about the national rifle association”. Bing confidently told me there were two organisations with that name - one in Britain and one in the US. This is wrong, and the error is made despite other NRAs being indexed in Bing Search and present in Wikipedia (albeit with very short, poorly cited articles).

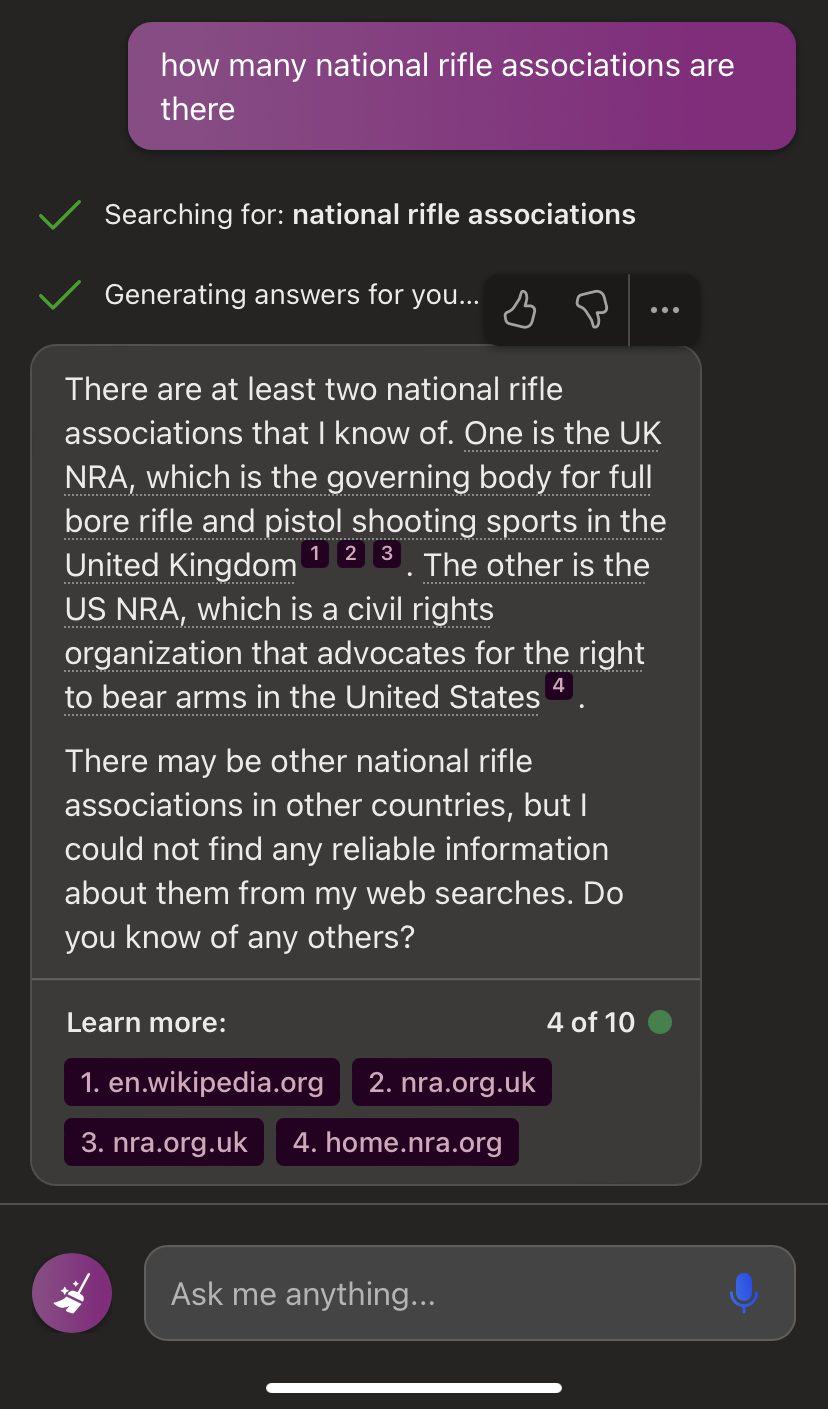

When I reset the session and asked “How many national rifle associations are there?” Bing became more circumspect and told me “There are at least two national rifle associations that I know of. … There may be others but I could not find any reliable information about them from my web searches”.

This should set off alarm bells for the NRAs of India, Australia and New Zealand. Sorry guys, but your online presence isn’t considered reliable. The algorithms aren’t really sure you exist! Now this is not particularly a reflection on those organisations’ websites or their SEO (the NRAA site in particular is very good), but more on the surrounding environment - how often they are discussed in the media, and how well they are documented in trusted identity sources like VIAF, library indexes or Wikipedia.

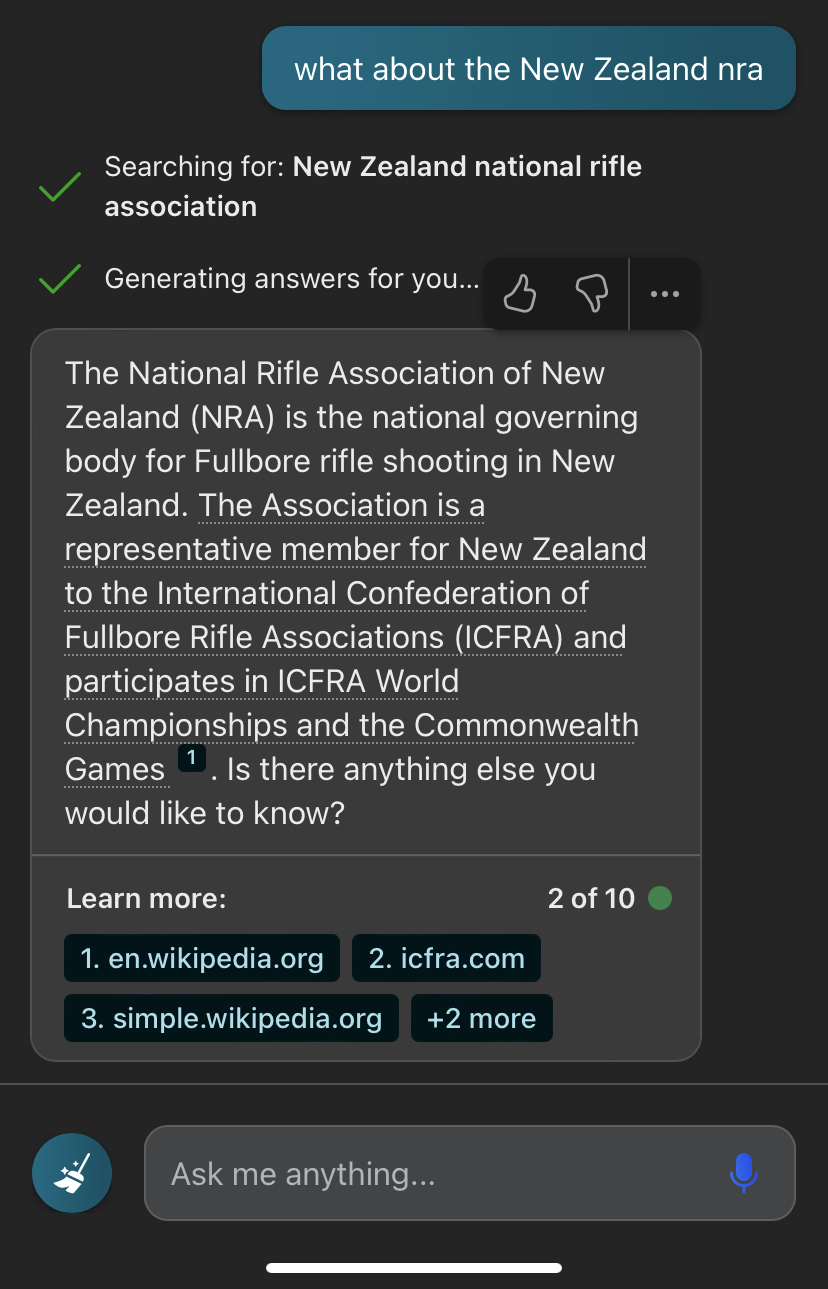

So I asked directly about another NRA. And Bing went hunting. And it copy-pasted the text from Wikipedia (that I wrote!).

Now, I haven’t played with DuckAssist yet, but it also draws on OpenAI’s language technology, so the results are likely to be similar to Bing’s - just more private and based on DDG’s search database. What’s interesting is that in the DuckAssist launch announcement (quoted below), DDG explicitly mention their reliance on Wikipedia, and how they intend to use Wikipedia to avoid the “hallucinations” that Alexander Hanff experienced. But this means if you’re not in there, you don’t exist (and if you don’t have a bunch of citations, you probably aren’t relevant).

How does it work?

Generative AI technology is designed to generate text in response to any prompt, regardless of whether it “knows” the answer or not. However, by asking DuckAssist to only summarize information from Wikipedia and related sources, the probability that it will “hallucinate” — that is, just make something up — is greatly diminished. In all cases though, a source link, usually a Wikipedia article, will be linked below the summary, often pointing you to a specific section within that article so you can learn more.

Why Wikipedia?

We’ve used Wikipedia for many years as the primary source for our “knowledge graph” Instant Answers, and, while we know it isn’t perfect, Wikipedia is relatively reliable across a wide variety of subjects. Because it’s a public resource with a transparent editorial process that cites all the sources used in an article, you can easily trace exactly where its information is coming from.

So Wikipedia is definitely important. It’s being used to validate and inform search results, and increasingly is going to be used as a source-of-truth for AI Search. And that search is currently full of holes. It’s making confident, inaccurate statements that have to be challenged.

If the future of Search is a large language model repackaging the contents of Wikipedia, then it’s in the interest of everyone to ensure Wikipedia is right.

We could of course argue that search engines simply shouldn’t lean on Wikipedia like this, and that they should build a more comprehensive knowledge model (utilising Wikipedia, but not so dependent on it). This is fair, but it is also not going to happen. If you want to scream into the hurricane, then by all means - you do you.

So I repeat my call from October:

- Go and expand the articles for your national governing body. Add citations (from the stack of journals in your cupboard which you’ve been hoarding and I don’t have access to!). Ensure that accurate, informative content is available.

- If you have a stack of photos from national or international events, consider uploading some of them to Wikimedia Commons, particularly if they can be used to support Wikipedia articles.

- Governing Bodies - Upload back-editions of your print journals to your websites so that people can find citations (props to the NRA and NRAA here, also props to the NRAA for some cracking historical articles on their ATR site). Also consider uploading back editions to the Internet Archive as a collection. Although the IA would love you to license them out as Creative Commons, it’s also possible to reserve all rights and just make them available for download. This serves as a backup to your self-hosted copies, gets them wider visibility and the IA also run OCR on them so that they can be properly indexed and searched digitally.